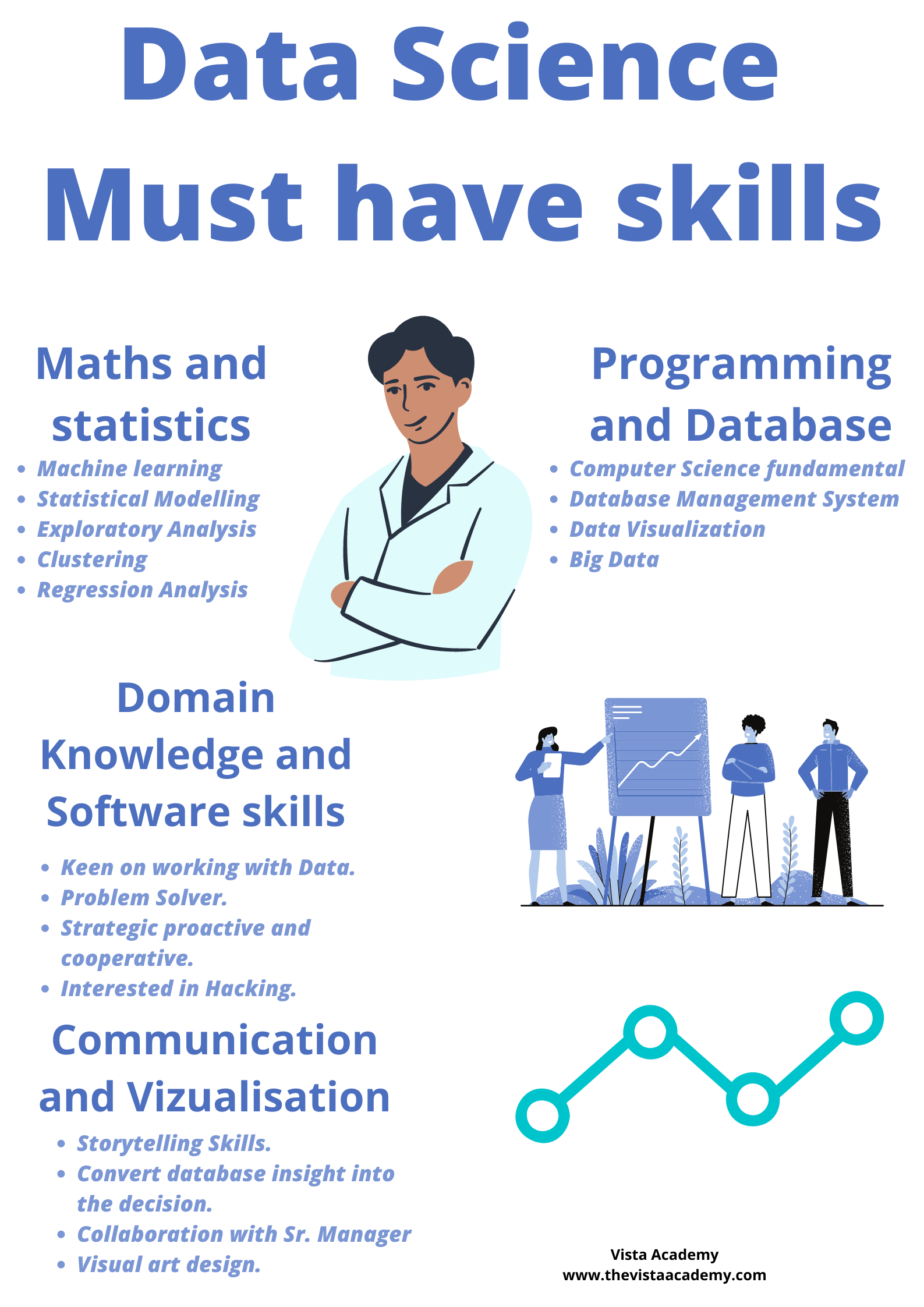

Data Science: Must-Have Skills You Need to Be a Data Scientist

Data Scientists are responsible for sharing their findings with key stakeholders, so these roles require someone who is not only adept at handling data but who can also translate and communicate findings across the organization.

Is math a part of data science?

Mathematical knowledge is necessary for data science careers because machine learning algorithms, data analysis, and insight discovery all depend on it. Although there are other requirements for your degree and employment in data science, math is frequently one of the most crucial.

Is math difficult in data science?

In practice, relatively little advanced math is needed for day-to-day data science work. Much of practical data science comes down to skill in using the appropriate tools, although some math is involved (which we’ll discuss in a moment). You do not always need to understand the intricate mathematics behind those tools.

Which math competencies are required for data science?

Arithmetic

Arithmetic, the math we learn in school, is the foundation of practically all other math and is crucial to data science. The study of numbers and the operations we perform on them, such as addition, subtraction, multiplication, and division, is known as arithmetic.

Linear Algebra

This branch of algebra focuses on vector spaces, matrices (the plural of matrix), and linear equations. Linear algebra underpins applications of math in geometry, physics, and engineering.
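As a small illustration of matrices and vectors, here is a minimal sketch of a matrix-vector product in plain Python. The function name `mat_vec` and the sample values are made up for this example; in real projects a library such as NumPy would normally do this work.

```python
def mat_vec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list of numbers)."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


# Hypothetical 2x2 matrix and 2-element vector, chosen only for illustration.
A = [[2, 1],
     [1, 3]]
x = [1, 2]

# Each output entry is the dot product of one row of A with x:
# [2*1 + 1*2, 1*1 + 3*2]
b = mat_vec(A, x)
print(b)
```

The same operation is the building block of linear equations of the form A·x = b, which is why it appears so often in machine learning code.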

Geometry

You have probably used a protractor, compass, or set square at some point, so you already have some knowledge of geometry. It involves measuring the lengths, areas, and volumes of objects to determine their shape, size, and relative position. Some of geometry’s theorems may come to mind, including that all right angles are equal and that a straight line is the shortest path between any two points.

Calculus

We need to be careful not to get off topic here. Calculus is the study of continuous change: integration calculates the area under a curve, and differentiation calculates the slope of a curve, that is, the rate at which the function changes. The straight line that best approximates a curve at a given point is called the tangent.
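Both ideas can be approximated numerically. The sketch below is only an illustration: the helper names `derivative` and `area_under_curve`, the step sizes, and the sample function are assumptions chosen for this example, not a standard API.

```python
def derivative(f, x, h=1e-6):
    """Approximate the slope of f at x with a central difference."""
    return (f(x + h) - f(x - h)) / (2 * h)


def area_under_curve(f, a, b, steps=10_000):
    """Approximate the area under f between a and b with the trapezoid rule."""
    width = (b - a) / steps
    total = 0.5 * (f(a) + f(b))
    for i in range(1, steps):
        total += f(a + i * width)
    return total * width


def square(x):
    return x * x


# For f(x) = x^2 the exact slope at x = 3 is 6,
# and the exact area between 0 and 3 is 9.
slope_at_3 = derivative(square, 3.0)
area_0_to_3 = area_under_curve(square, 0.0, 3.0)
print(round(slope_at_3, 4), round(area_0_to_3, 4))
```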

Logarithms are also part of this toolkit: they describe the running time of binary search, which halves the search range at every step and therefore needs about log2(n) comparisons for n sorted items.
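To make the link between logarithms and binary search concrete, here is a minimal sketch that counts how many comparisons a search takes. The step counter is added purely for illustration and is not part of a typical implementation.

```python
def binary_search(sorted_items, target):
    """Return (index, steps); index is -1 when the target is absent."""
    low, high = 0, len(sorted_items) - 1
    steps = 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid, steps
        if sorted_items[mid] < target:
            low = mid + 1   # discard the lower half
        else:
            high = mid - 1  # discard the upper half
    return -1, steps


# 1024 sorted items: the search needs at most floor(log2(1024)) + 1 = 11 steps.
index, steps = binary_search(list(range(1024)), 1023)
print(index, steps)
```

Doubling the size of the list adds only one more step, which is what logarithmic growth means in practice.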

Statistics

It is important to know key terms like mean, median, mode, maximum likelihood estimators, standard deviation, and distributions. Data scientists should understand sampling techniques and how to avoid bias in experiments. Descriptive statistics paint a picture of the data through charts and graphs, while inferential statistics help you make predictions using that data.
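The basic descriptive measures are available in Python's standard `statistics` module. The sample below is invented for illustration.

```python
import statistics

# Hypothetical sample of customer ages.
ages = [23, 25, 25, 29, 31, 35, 42]

mean_age = statistics.mean(ages)      # sum of values divided by their count
median_age = statistics.median(ages)  # middle value of the sorted sample
mode_age = statistics.mode(ages)      # most frequent value
stdev_age = statistics.stdev(ages)    # sample standard deviation

print(mean_age, median_age, mode_age, round(stdev_age, 2))
```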

Probability

Probability helps you perform statistical tests, so you can tell if you are truly uncovering meaningful trends in the data.
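As a sketch of how probability feeds a statistical test, the snippet below computes how likely it is to see at least 9 heads in 10 flips of a fair coin. The function name `prob_at_least` and the coin scenario are assumptions made for this example.

```python
from math import comb


def prob_at_least(k, n, p=0.5):
    """Probability of k or more successes in n independent trials."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# If the coin is fair, 9 or more heads out of 10 should be rare.
p_value = prob_at_least(9, 10)
print(round(p_value, 4))
```

A small value like this suggests the observed result would be unusual under the "fair coin" assumption, which is the reasoning behind a one-sided hypothesis test.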

Linear algebra

Linear algebra is the backbone of important algorithms, and knowledge of matrices and vectors will definitely help, especially if you specialize more in machine learning.

Multivariate calculus

Brush up on mean value theorems, gradients, derivatives, limits, the product and chain rules, Taylor series, and beta and gamma functions. These topics underlie regression algorithms, and you may face calculus problems in interviews.
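One place where gradients and regression meet is gradient descent. The following is a minimal sketch, assuming a mean squared error loss and a straight-line model y = w*x + b; the function name `fit_line`, the learning rate, and the data are illustrative choices, not a recommended production setup.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```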

Programming and Database

To move from the theoretical into creating practical applications, a Data Scientist needs strong programming skills.

Many employers will expect you to know Python, R, or both, and sometimes other programming languages as well.

Object-oriented programming, basic syntax and functions, flow control statements, and familiarity with libraries and documentation all fall under this umbrella.

You need to be proficient in SQL as a data scientist.

This is because SQL is specifically designed for accessing, manipulating, and communicating with data stored in databases. Querying a database with SQL is how you pull out the data behind your insights.
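A short sketch of what querying looks like in practice, using Python's built-in `sqlite3` module with an in-memory database. The `orders` table and its rows are invented for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # temporary database, nothing written to disk
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("alice", 120.0), ("bob", 80.0), ("alice", 30.0)],
)

# Total spend per customer, largest first.
rows = conn.execute(
    "SELECT customer, SUM(amount) AS total "
    "FROM orders GROUP BY customer ORDER BY total DESC"
).fetchall()
conn.close()

print(rows)
```

The `GROUP BY` and `SUM` combination is one of the most common patterns in analytical SQL: it turns raw rows into a per-customer summary.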

Domain Knowledge

Domain knowledge, which a Data Scientist should always keep in mind, can be defined in the context of:

The source problem that the business is trying to resolve and/or capitalize on.

The set of specialized information or expertise held by the business.

The exact know-how for domain-specific data collection mechanisms.

Extraction, transformation, and loading of data

Assume we have several data sources, such as MySQL, MongoDB, and Google Analytics. You must extract data from these sources and then transform it so that it can be stored in a format or structure suitable for querying and analysis. Finally, you must load the data into the data warehouse, where it will be analysed. Data Science may be a suitable career choice for people with an ETL (Extract, Transform, and Load) background.
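The three ETL stages can be sketched in a few lines. In this illustration, two small in-memory exports (one CSV, one JSON) stand in for real source systems, and an in-memory SQLite database stands in for the data warehouse; all names and values are hypothetical.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw exports standing in for two different source systems.
CSV_EXPORT = "user_id,country,visits\n1,de,3\n2,US,5\n"
JSON_EXPORT = '[{"id": 3, "country": "fr", "visits": "7"}]'


def extract():
    """Read the raw records from each source."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_EXPORT)))
    json_rows = json.loads(JSON_EXPORT)
    return csv_rows, json_rows


def transform(csv_rows, json_rows):
    """Bring both sources into one schema: (user_id, country, visits)."""
    unified = []
    for row in csv_rows:
        unified.append((int(row["user_id"]), row["country"].upper(), int(row["visits"])))
    for row in json_rows:
        unified.append((int(row["id"]), row["country"].upper(), int(row["visits"])))
    return unified


def load(rows, conn):
    """Write the unified rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS visits (user_id INTEGER, country TEXT, visits INTEGER)"
    )
    conn.executemany("INSERT INTO visits VALUES (?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")
load(transform(*extract()), warehouse)
total_visits = warehouse.execute("SELECT SUM(visits) FROM visits").fetchone()[0]
print(total_visits)
```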

Data Wrangling and Data Exploration

You have data in the warehouse, but it is messy. Data wrangling is the process of cleaning and unifying messy and complex data sets for easy access and analysis. The first step in the analysis itself is exploratory data analysis (EDA). Here, you make sense of the data you have and then decide what questions to ask and how to frame them, as well as how to best manipulate your available data sources to get the answers you need.
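A minimal sketch of wrangling followed by a first exploratory question, written in plain Python. The records, the cleaning rules (trim whitespace, normalise case, drop rows with a missing age, remove duplicates), and the function name `clean` are assumptions for this example; a library such as pandas is the more common choice in practice.

```python
# Hypothetical messy records, as they might arrive from a warehouse export.
raw_records = [
    {"name": " Alice ", "city": "berlin", "age": "34"},
    {"name": "Bob", "city": "PARIS", "age": ""},        # missing age
    {"name": "alice", "city": "Berlin", "age": "34"},   # duplicate of the first row
    {"name": "Carol", "city": "Rome", "age": "29"},
]


def clean(records):
    """Normalise text fields, drop rows without a valid age, remove duplicates."""
    cleaned, seen = [], set()
    for rec in records:
        name = rec["name"].strip().title()
        city = rec["city"].strip().title()
        age_text = rec["age"].strip()
        if not age_text.isdigit():
            continue  # skip rows with a missing or malformed age
        key = (name, city)
        if key in seen:
            continue  # skip duplicates
        seen.add(key)
        cleaned.append({"name": name, "city": city, "age": int(age_text)})
    return cleaned


tidy = clean(raw_records)

# A first exploratory question: what is the average age in the cleaned data?
average_age = sum(r["age"] for r in tidy) / len(tidy)
print(len(tidy), average_age)
```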

Communication and Visualization

Data visualization is a key part of being a Data Scientist, as you need to communicate key messages effectively and get buy-in for proposed solutions.

Breaking complex data down into smaller, digestible pieces and using a variety of visual aids (charts, graphs, and more) is a skill any Data Scientist needs to master in order to advance career-wise.

Data doesn’t speak for itself; someone has to shape it into a message, which means an effective Data Scientist needs strong communication skills.

Whether it’s explaining to your team what steps you want to follow to get from A to B with the data, or giving a presentation to business leadership, communication can make all the difference in the outcome of a project.

MACHINE LEARNING

Machine learning (ML) is an add-on to the Data Scientist skill set for firms that manage massive volumes of data and follow a data-centric decision-making process. Machine learning, a subset of AI, supports data modelling. K-nearest neighbours, Random Forests, Naive Bayes, and regression models are some of the methods used.
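To give a feel for one of these methods, here is a minimal sketch of k-nearest neighbours classification in plain Python. The function name `knn_predict`, the points, and the labels are invented for illustration; libraries such as scikit-learn provide tested implementations.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest points."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical two-dimensional training data with two well-separated groups.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["small", "small", "small", "large", "large", "large"]

prediction = knn_predict(points, labels, (1.1, 1.0))
print(prediction)
```

The model has no training step at all: it simply stores the data and compares each new point with its closest neighbours, which makes it a useful first algorithm to study.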

DEEP LEARNING

Deep Learning is a more advanced type of Machine Learning. Many companies now use Deep Learning models because they can overcome some constraints of standard Machine Learning methodologies. The abilities required for Data Scientist positions include the fundamentals of neural networks, the libraries used to create Deep Learning models such as TensorFlow or Keras, and an understanding of how Convolutional Neural Networks, Recurrent Neural Networks, Restricted Boltzmann Machines (RBMs), and Autoencoders function.

DATA SCIENCE TOOLS

To get a job as a Data Scientist, you must have hands-on experience with most-sought-after data science tools such as MS Excel, Python or R, Hadoop, Spark, Tableau, and more.

BIG DATA

In the field of data science, the term “big data” is becoming increasingly widespread. It has become a critical requirement for businesses because it helps them make better business decisions and gives them an advantage over their competition.

PROBLEM SOLVING

One of the inherent skills required to become a Data Scientist is having an appetite for solving real-world problems. A Data Scientist needs to productively approach a problem, which means you must develop the art of calculating the risks associated with specific business models.

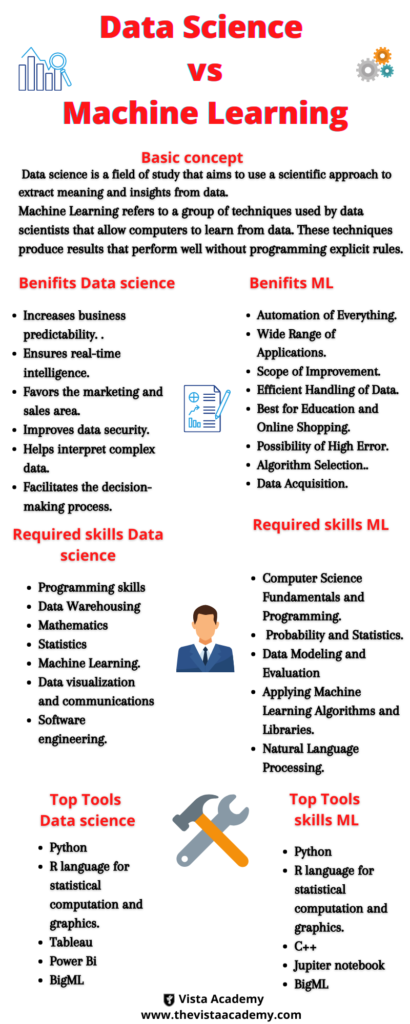

BASIC CONCEPT OF DATA SCIENCE AND MACHINE LEARNING

Data Science is a field that combines statistical methods, modeling techniques, and programming knowledge. A data scientist first analyzes the data to uncover hidden insights and then applies various algorithms to create a machine learning model.

Machine Learning is a subset of AI in which the machine is trained to learn from its past experience. That experience comes from the data collected, which is combined with algorithms such as Naïve Bayes or Support Vector Machines (SVM) to deliver the final results.

The key objective of machine learning is to empower computers to learn automatically without requiring ongoing human assistance or intervention.

BENEFITS OF DATA SCIENCE AND MACHINE LEARNING

BENEFITS OF DATA SCIENCE

Helps the marketing and sales area

Data-driven marketing is a widely used term nowadays.

The reason is simple: only with data can we offer solutions, communications, and products that are genuinely in line with customer expectations and needs. Want to map the entire customer journey, considering all the touchpoints your customer has had with your brand? This is possible with Data Science.

Enhances data security

Data scientists work on fraud prevention systems, for example, to keep your company’s customers safer. They can also study recurring patterns of behavior in a company’s systems to identify possible architectural flaws.

Helps to clarify complex data

When we want to cross-reference different data sets to understand the business, data science is a great solution, and it improves our view of the market. Depending on the tools we use to collect data, we can combine data from “physical” and virtual sources for better visualization.

It enables real-time intelligence

The data scientist can work with RPA (robotic process automation) professionals to identify the different data sources of the business and create automated dashboards that query all of this data in real time in an integrated manner.

Improves business forecasting

With the help of the data scientist, it is possible to use technologies such as Machine Learning and Artificial Intelligence to work with the data that the company has and, in this way, carry out more precise analyses of what is to come.

BENEFITS OF MACHINE LEARNING

Automation of Everything

Machine Learning cuts workload and time. By automating tasks, we let the algorithm do the hard work for us. Automation is now used almost everywhere because it can be very reliable, and it frees us to think more creatively.

Wide range of applications

Machine Learning is used in many industries these days, from defense to education. Companies use it to generate profits, cut costs, automate processes, predict the future, analyze trends and patterns in past data, and much more. GPS tracking for traffic, email spam filtering, text prediction, and spell checking and correction are a few widely used applications.

Questions and Answers for Data Science

Proficiency in languages like Python and R is crucial for data manipulation, analysis, and visualization. Python’s versatility and extensive libraries make it a popular choice, while R is well-suited for statistical analysis.

Statistical knowledge is fundamental. It helps in designing experiments, understanding distributions, and making meaningful inferences from data. A data scientist should grasp concepts like hypothesis testing, regression, and probability.

Machine learning involves developing algorithms that enable systems to learn patterns from data. It’s essential for creating predictive models, classification, clustering, and making data-driven decisions.

Data preprocessing involves cleaning, transforming, and organizing raw data into a usable format. It’s critical as high-quality data is necessary for accurate analysis and modeling.

Data visualization tools like Matplotlib, Seaborn, and Tableau help in creating meaningful graphs and charts to communicate insights effectively. Visualization enhances understanding and aids in decision-making.

Domain knowledge is crucial for understanding the context of the data. It enables data scientists to ask relevant questions, design appropriate analyses, and interpret results in a way that aligns with real-world scenarios.

Big data technologies like Hadoop, Spark, and NoSQL databases enable the processing and analysis of massive datasets that traditional tools might struggle with. Knowledge of these technologies is beneficial for handling large-scale data.

A common approach involves defining the problem, gathering and preparing data, exploratory data analysis, feature engineering, model selection and training, evaluation, and deployment. Effective project management and communication are also crucial.

Soft skills such as communication, collaboration, and critical thinking are vital. Data scientists need to explain complex results to non-technical stakeholders, work in teams, and approach problem-solving creatively.

Continuous learning is essential. Data scientists can read research papers, attend conferences, take online courses, and participate in online communities. Staying up-to-date with the latest tools, techniques, and trends is crucial for success.